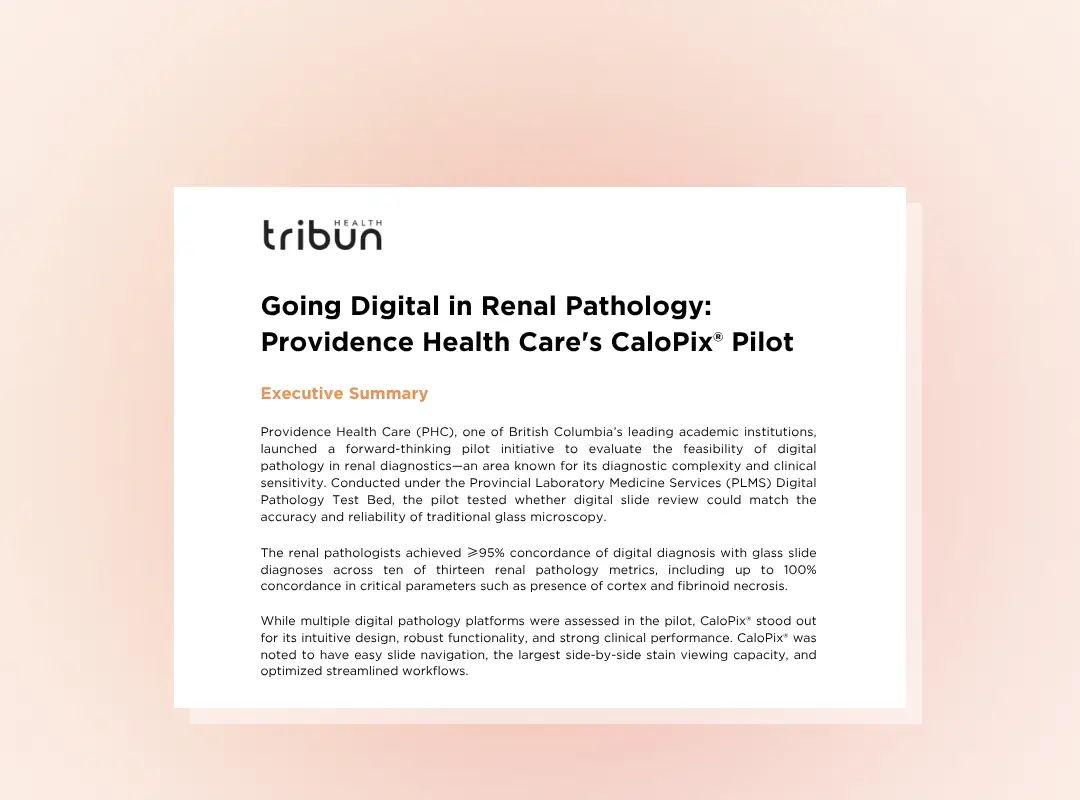

White Papers

Digital Renal Pathology: Providence Health Care CaloPix® Pilot

Published on 13/11/2025

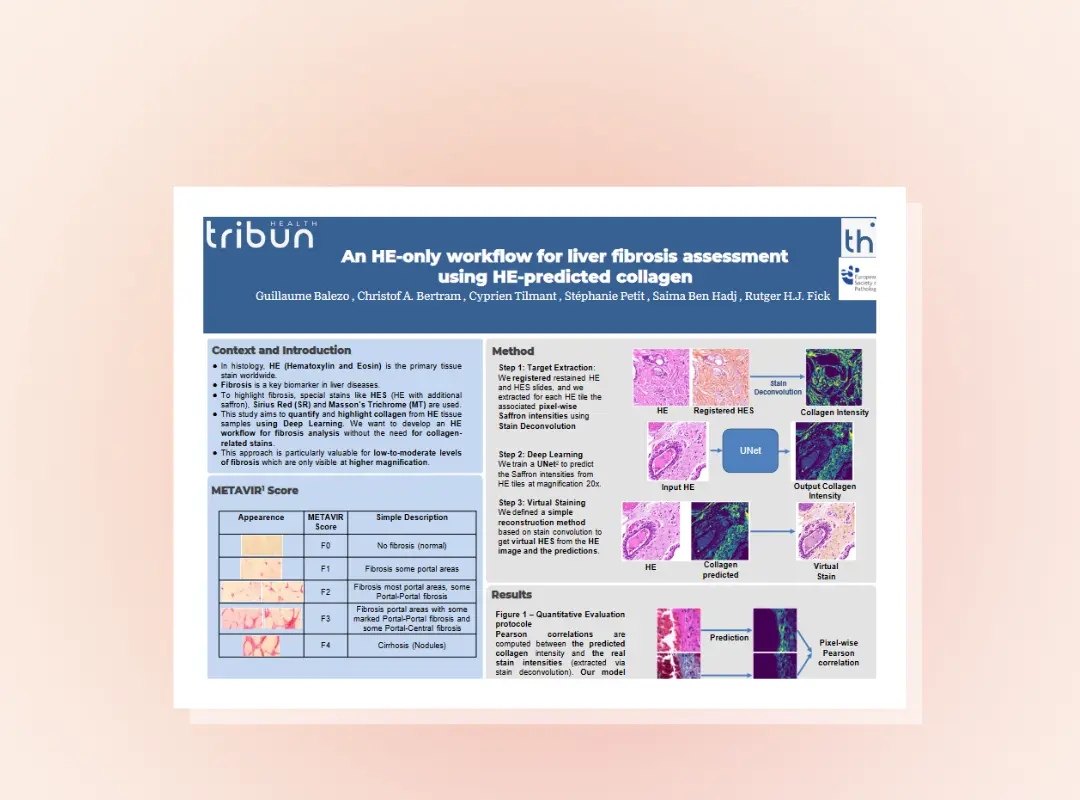

Scientific Posters

Assessment of hepatic fibrosis using HE staining

Published on 14/09/2023

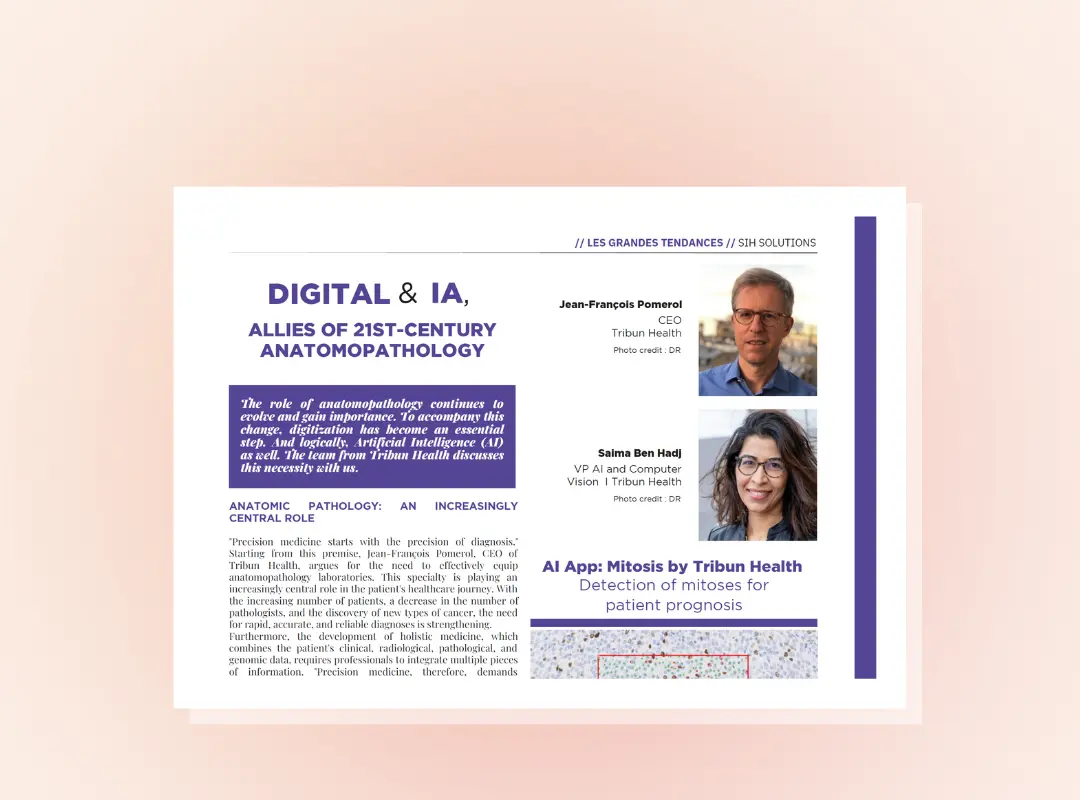

Articles

Digital & AI, Allies of 21st-Century Anatomopathology

Published on 31/05/2023

Articles

Hospital Digitization: A Pathology Parallel with Laurent Treluyer

Published on 18/01/2023

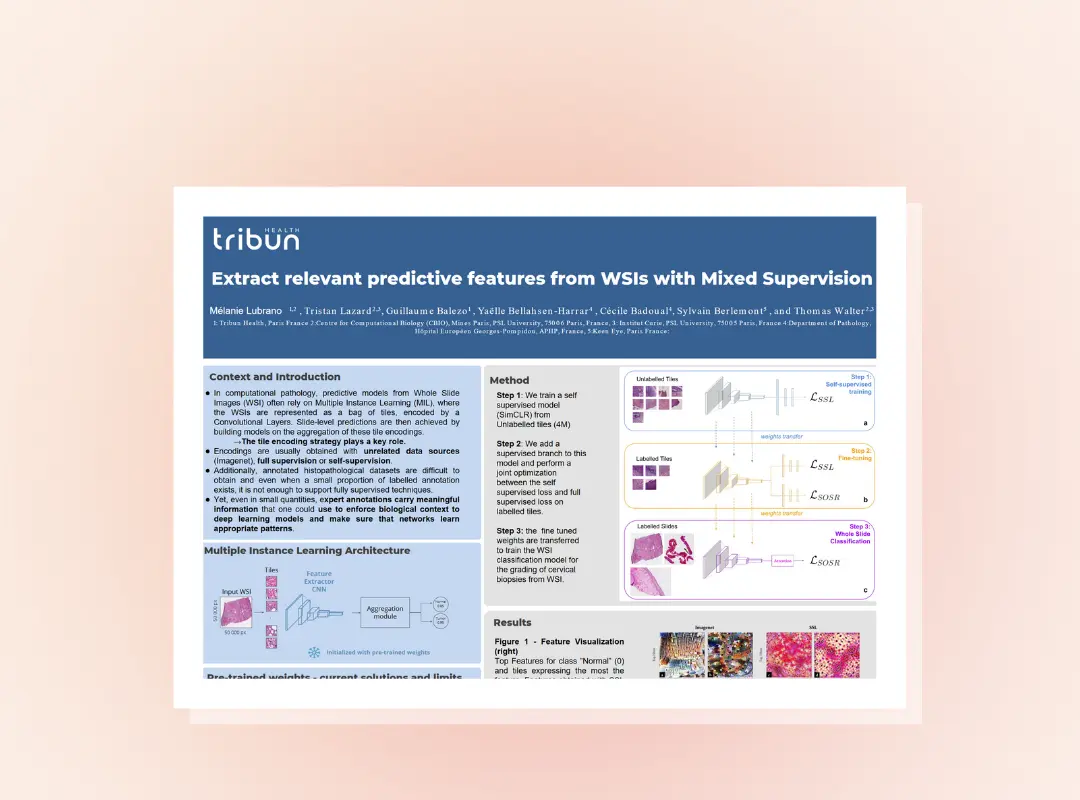

Scientific Posters

Extract relevant predictive features from WSIs

Published on 30/11/2022

Scientific Papers

Mitosis Domain Generalization in Histopathology

Published on 25/11/2022

Scientific Papers

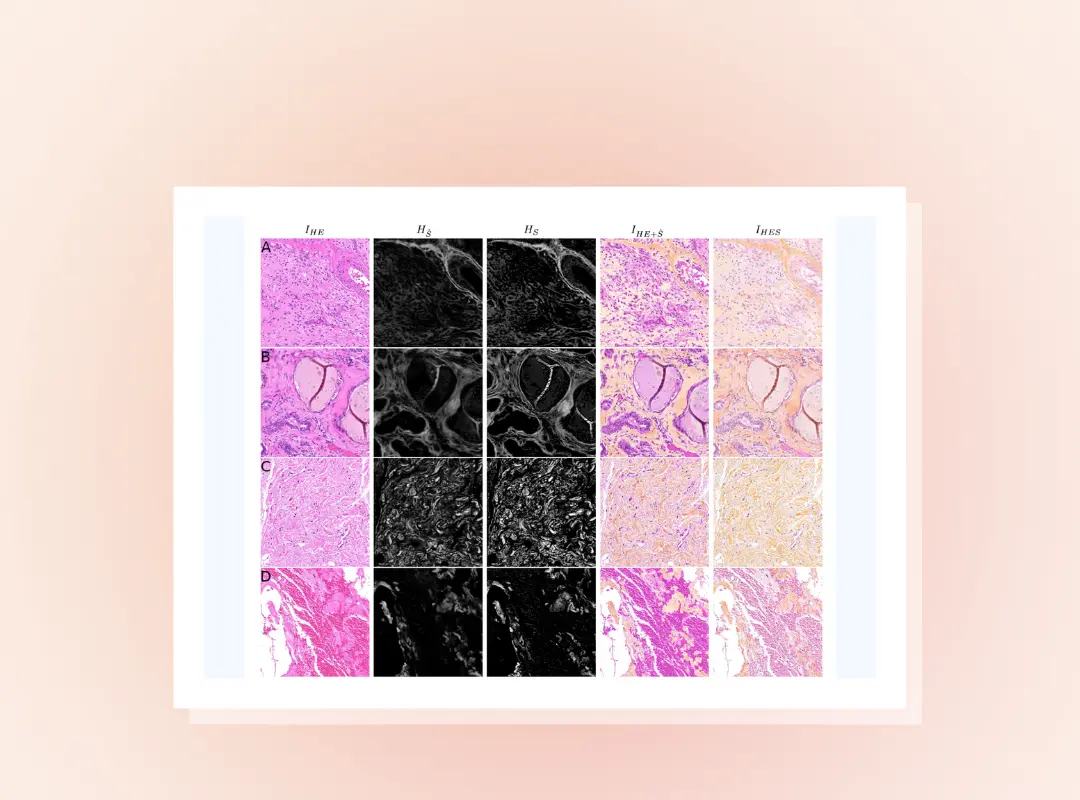

Collagen Prediction and Virtual Saffron Staining

Published on 18/11/2022

Scientific Papers

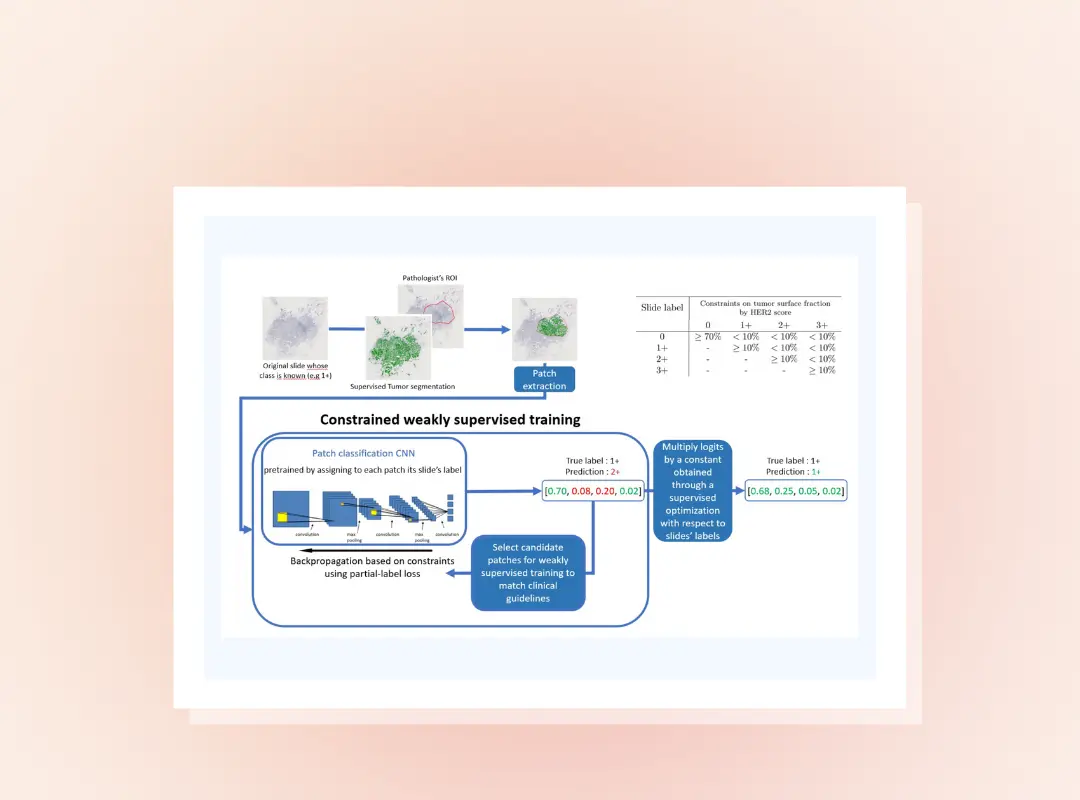

Understanding HER2 Scoring via Clinical Guidelines

Published on 18/11/2022

Articles

Tribun Health 'Best in KLAS' for digital pathology at HIMSS in Orlando

Published on 03/05/2022